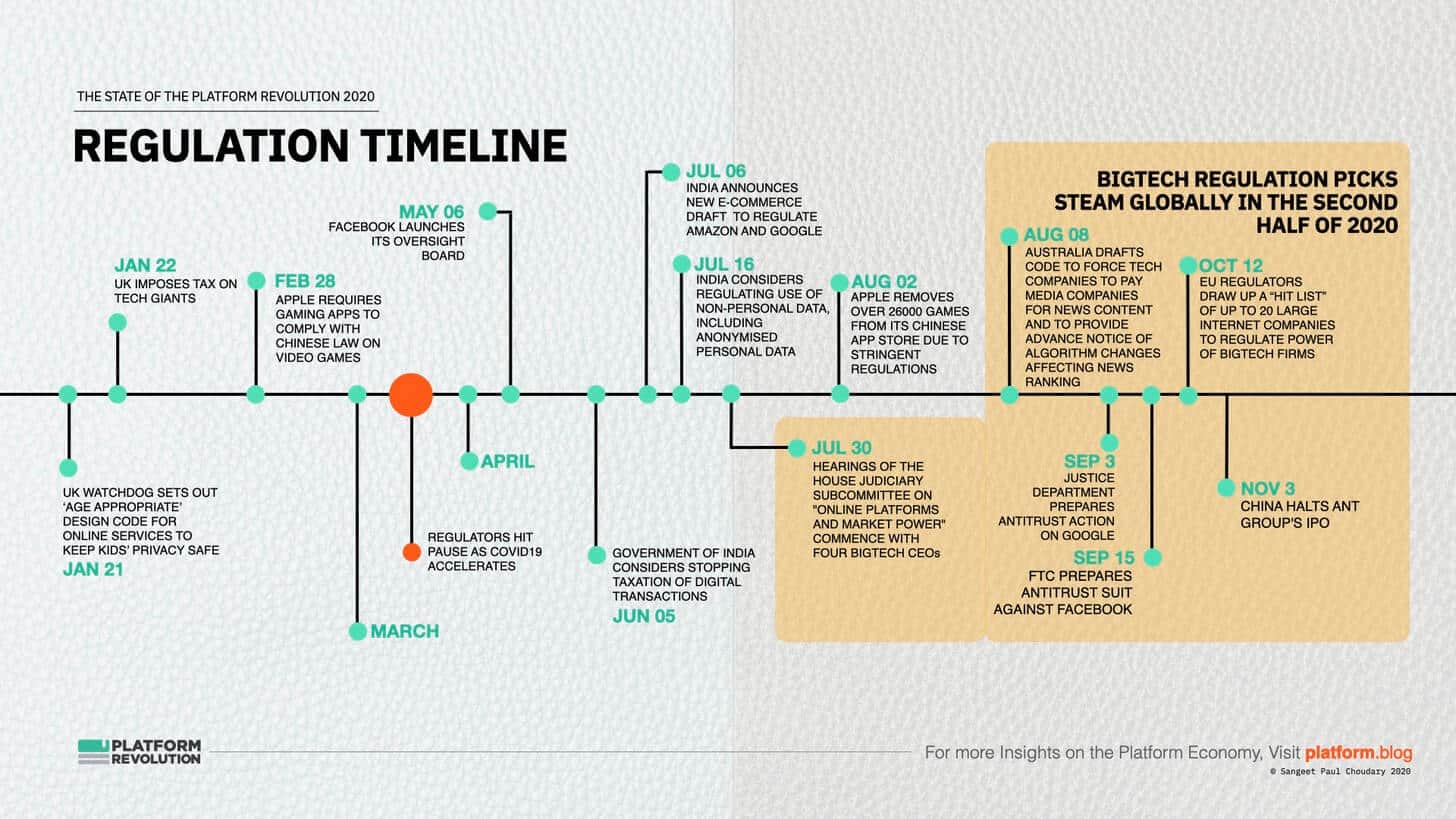

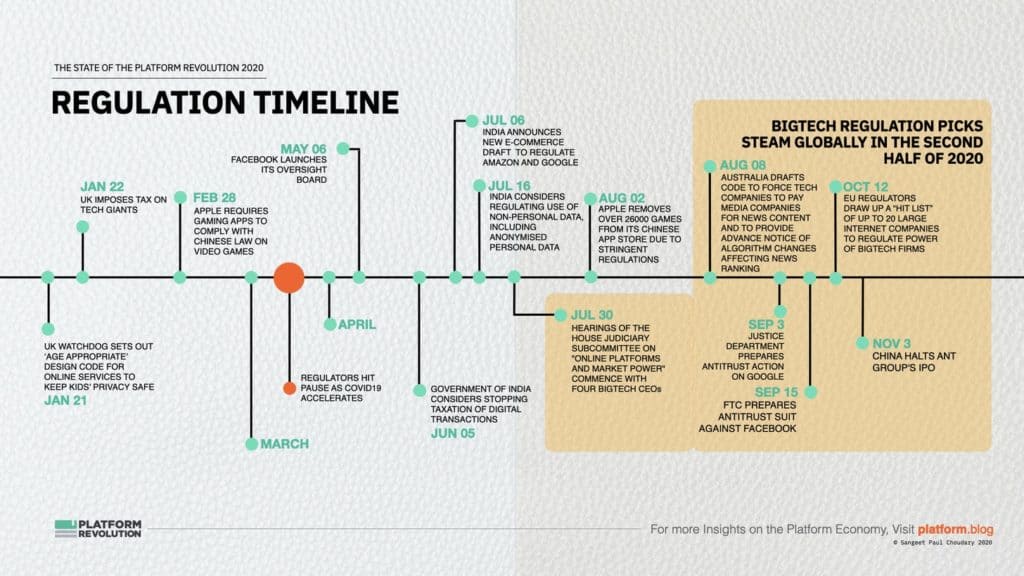

The age of platform regulation is finally here

Regulatory response to BigTech’s power play

Last week, 46 states across the US hit Facebook with a massive lawsuit. As recently as yesterday (Dec 12, 2020), California joined the US antitrust lawsuit against Google. Meanwhile, Cydia, an iPhone app store that predates Apple’s own App Store, has jumped on the antitrust bandwagon and filed a lawsuit against Apple, citing anti-competitive moves to tie the iPhone to the App Store.

As 2020 draws to a close, the lull in regulation that we saw in the first half of the year (thanks to Covid) is behind us, as regulators step up their efforts to address the monopoly power of BigTech. 2021 is poised to be a landmark year for platform regulation. This is one of the key themes covered in my end-of-year report.

As this timeline demonstrates, regulation is only now picking up momentum towards a series of changes in 2021.

This issue covers four key topics:

- The dark side of platform dominance

- How “moving fast and breaking things”, combined with archaic antitrust laws, has let BigTech run unchecked for far too long

- Four schools of thought on platform regulation

- Design principles for platform regulation and unintended consequences of over-regulation

The dark side of platform dominance

As platforms set up control points and competitive bottlenecks to establish dominance in their ecosystem, they often act against the interests of other actors in that ecosystem. This leads to several scenarios in which ecosystem participants, and society and markets at large, may be adversely affected by the platform’s dominance. Effective regulatory mechanisms must target these control points.

First, several B2C platforms leverage their control over market access or data to directly compete with other players in the ecosystem. Google has been extensively investigated and fined for unfair practices where it pushed down rivals in Google search results, while several researchers have demonstrated how Amazon uses its oversight over market-wide data on its platform to selectively compete with merchants on the platform.

Second, platforms like Facebook and Google have been under increasing scrutiny on their data usage practices. Facebook’s news feed and Google’s search service are important control points that enable these platforms to constantly harvest data from users, often more data than is required for the improvement of their services. This data, in turn, serves as a control point over brands and advertisers looking to influence these users.

Third, platforms may start as open systems but increasingly exert greater control over the ecosystem, without prior warning to ecosystem members and to their detriment. Google has been criticized for creating an increasing number of control points over Android, while platforms like Twitter and Uber have often changed policies to the detriment of actors in their ecosystems.

Some platforms also engage in unfair and differential treatment of their ecosystem partners. For instance, Amazon and Flipkart have been charged by the Competition Commission of India (CCI) with giving preferential treatment to certain sellers in exchange for bilateral arrangements that favor the platform. Both platforms have been accused of offering deep discounts and preferential listings to certain sellers, to the detriment of other sellers.

Another aspect of fairness deals with the inability to audit algorithms used by platforms. Since platform algorithms determine market access and consequently market power for ecosystem participants, the black-boxing of such algorithms prevents regulatory scrutiny.

Platforms may also commoditize ecosystem players by intensifying competition among them. For instance, Amazon’s Buy Box is a highly contested battleground that increases competition among merchants, commoditizing them and squeezing their margins. When multiple sellers offer the same product, Amazon’s algorithms determine which seller is featured in the Buy Box. This matters because an estimated 80-90% of Amazon’s sales go through the Buy Box. While the Buy Box reduces search costs for consumers and helps create a more efficient market, smaller merchants joining the platform are negatively impacted by this intensified competition.

Finally, as more services and work move into the platform economy, platforms also use their dominant power to exploit workers and service providers in their ecosystem. In a paper for the ILO’s Future of Work Commission, I elaborated on the conditions under which platforms like Uber and Deliveroo exploit drivers in their ecosystem. I’ve also discussed this in more detail in the theme on the gig economy.

The examples above represent only a handful of issues wrought by today’s dominant platforms. As the platform economy grows, understanding control points will be key to understanding the changing power structures across the platform economy. Regulation, accordingly, will be successful only if it addresses these issues.

An insufficient antitrust model

Antitrust statutes to protect competitive markets were built out in the industrial era. These statutes worked at a time when markets were comparatively stable due to steady technology. But with rapid shifts in technology, companies can easily take advantage of customers and eliminate competition. Antitrust statutes do not address more nuanced competitive issues and monopoly definitions break down when platforms seemingly guarantee consumer welfare by provisioning subsidized services. These frameworks fail to account for the consumer as worker and creator (at a minimum, through the generation of data) and the exploitation of data as the work product.

Moving Fast Breaks More Things

Silicon Valley’s “Move Fast and Break Things” refrain has led to digital platforms making their own rules. The technology industry usually leaves the quality and damage control of minimum viable products to the end user. While this worked at a small scale previously (even for Google and Facebook), it led to unforeseen problems as these platforms started scaling their economic influence. Seemingly innocent experiments – A/B testing – on peer-to-peer lending platforms, for instance, create market fairness issues. False positives spewed out by facial recognition algorithms show what happens when new technology is released without any safeguards. Racial and gender bias has been prominent in facial recognition software and AI, including systems used by law enforcement. Just as medicines and chemicals are required to adhere to safety standards, tech platforms too should demonstrate compliance with fit-for-market standards before entering the market. Platforms should be held financially accountable for any damage caused by their products.

The US, EU, and Asia have stepped up regulatory action against BigTech platforms

No liability, no incentive?

Platforms have little liability for, and no incentive to reduce, the distribution of harmful content as long as such distribution serves to scale their business models. Facebook, Instagram, YouTube, and Twitter make money from advertising, which relies on user attention. Platforms use algorithms to push controversial content such as hate speech, disinformation, and conspiracy theories, because it draws attention and amplifies user engagement. Recommendation engines will keep surfacing potentially harmful content as long as there is no economic incentive to stop. In the US, platforms enjoy protection from liability under Section 230 of the Communications Decency Act of 1996, which gives platforms broad immunity for harm caused by third-party content.

Regulation of data privacy

Prof. Shoshana Zuboff from Harvard says that personal data should be treated like body organs and as a human right rather than as an asset that can be bought or sold. According to Zuboff, no corporation should be allowed to use private data to influence user choices.

Of course, blanket regulation of data would be rife with issues. Some regulations, like Europe’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), put the onus on consumers to decide whether companies may use their data. But this is problematic, as consumers are usually unaware of how their data are exploited by corporations.

Moreover, much of value creation in the digital economy relies on effective use of data to improve market interactions and consumer decisions. Over-regulation aimed at ensuring privacy runs the risk of imposing too many controls over data acquisition by platforms, which could in turn directly impact the platform’s ability to enable efficient markets.

Regulators will need robust frameworks explaining uses of data that benefit consumers and markets and those that are purely exploitative and aid value concentration towards a single actor, typically the platform owner. Ideally, regulation would deal with data ownership and usage rights in a manner that not only enabled the platform to create an efficient market but also protected the ecosystem actors and assisted the bodies that represent them.

Whither regulation? – Various schools of thought

Should platforms be regulated? Various schools of thought have emerged over the past several years.

- Platform bans

Several jurisdictions have taken the extreme approach of entirely banning platforms that do not comply with existing regulations. This is unlikely to prove a nuanced or sustainable solution. Bans are often championed by lobbying incumbents, who seek to protect a traditional advantage, and these bans run the risk of disincentivizing innovation entirely. Moreover, the imposition of bans is far from uniform, and this inconsistency tends to produce a fragmented regulatory landscape which can impede concerted and consistent regulatory action against the platform. More importantly, a fragmented regulatory landscape also has larger systemic effects, such as the migration of technology firms to jurisdictions with lighter regulation, with long-term repercussions for cities and countries imposing the ban.

- No regulation is good regulation

Another response, at the opposite end of the regulatory spectrum, is the complete absence of regulation. Some scholars argue that traditional regulation, when applied to platforms, will lead to over-regulation, thereby curtailing all benefits that labour platforms create. Some proponents of eliminating regulation go so far as to suggest that, because the interests of the platform are intrinsically aligned with those of the ecosystem, platforms will naturally be motivated to invest in ecosystem protection. However, as already demonstrated across jurisdictions, “no regulation” is unlikely to be a practical approach that is widely adopted.

- Self-regulation

A third, related argument champions self-regulation by the platform. Self-regulation is frequently proposed as a feasible solution because of the information asymmetry that exists between the platform and other stakeholders, including the traditional regulator. The argument for self-regulation rests on two key pillars: first, that reputation systems are effective in guaranteeing market efficiency, and, second, that market efficiency is aligned with positive outcomes for all platform stakeholders. However, both these arguments are contentious. Reputation systems can be manipulated and biased. While self-regulation may work to the extent that it creates an efficient market, it is unlikely to be successful as a means to empower all actors when their interests are at odds with the interests of the platform owner. Though flawed, the argument for self-regulation throws a welcome but harsh light on the need for regulation to expand visibility into the opaque data and obscure workings of platforms. An independent regulator is required to ensure fair competition among platforms; delegating regulatory responsibility to the platform owner because of their exclusive access to this data is not a solution.

- Using narrative to sidestep regulation

Platform promoters use the phrases “sharing economy” and “collaborative consumption”, which conjure a positive image of platforms in general, and on-demand platforms in particular. These narratives present the platform as an intermediary that facilitates efficient, sustainable and decentralized markets in a manner that needs no regulation. However, the concept of sharing can be cynically obfuscated. Platforms such as Couchsurfing, which started as not-for-profit intermediaries, enabling sharing among participants, have moved on to create for-profit businesses, focused on maximizing shareholder value, sometimes to the detriment of existing stakeholders. These decentralized production systems encourage a culture of sharing but are answerable to centralized governance and funding; the sharing economy narrative of altruism and socialism is secondary to the platform’s profit-seeking behaviour. While for-profit platforms may also encourage a culture of sharing, the eventual centralization of profits and maximization of shareholder value are at odds with the overall narrative. More specifically, these platforms may improve market access and generate additional surplus but this does not imply that such surplus is equitably distributed among all stakeholders. Any regulatory framework should ensure that these narratives do not function as a ploy to sidestep regulation while maintaining control, information asymmetry, and profit centralization that could lead to ecosystem exploitation.

Crafting regulatory responses

Regulators need to work towards the creation of appropriate ecosystem protection and empowerment, while ensuring that such regulation is applied not at the point of market entry but subsequent to it, using actual data from platform usage.

Data plays an important role in creating value and establishing power dynamics on the platform. Data enables the creation of efficient markets. Both consumer and producer behaviour can be influenced using data. Data also creates enforced dependency for various platform users. Finally, the platform’s exclusive ownership of data also creates greater information asymmetry between the platform and all other stakeholders.

An expandable and effective regulatory framework for platforms must be centred around the regulation of data. To that end, the regulatory framework should involve four key components:

1. Decreasing information asymmetry between platform and ecosystem actors

Several patterns of ecosystem exploitation on platforms can be traced back to the information asymmetry that exists between the platform and its ecosystem. Decreasing information asymmetry would increase the bargaining power of the ecosystem.

2. Reducing ecosystem dependency driven by proprietary data that locks in users

If reputation data for producers is locked to a specific platform, producers cannot easily move to other platforms, which further reduces their bargaining power.

3. Regulating through open data

The exclusive ownership of data by the platform also serves to obstruct effective regulation. Lacking visibility into actual behaviours on the platform, regulators resort to traditional regulation, which can often impede innovation without increasing ecosystem empowerment. At its most extreme, regulators may choose to ban a platform outright. Instead, platforms should cooperate with regulators by facilitating external access to their data. The incentive to do so would be much lower regulation upfront. Access to data would be heavily curated to alleviate concerns that third parties could gain insight into a platform’s carefully nurtured competitive strengths. Regulators and platform owners would therefore need to work together to identify data that offer an understanding of relevant market behaviour without reducing the platform’s competitiveness.

4. Enabling alternate regulatory structures on the data

Even as platforms agree to provide access to their data, regulators must set up more agile and decentralized regulatory structures. With data access, the regulatory structure itself could work like a multi-sided platform. Platform users would act as producers of data. These data could be consumed through API access by third parties. This would allow regulators to set up overall regulatory guidelines and empower third parties such as research agencies to analyse the data and propose regulatory interventions based on actual market behaviour. This would also allow regulation to expand at the rate of innovation. Just as platforms exploit decentralized value creation, so this form of co-developed regulation would allow regulators to exploit decentralized regulation, keeping pace with innovation on the platform.
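The fourth component can be pictured with a minimal Python sketch. It is an illustration under stated assumptions, not a description of any real platform’s or regulator’s API: the `Transaction` fields, the `EXPOSED_FIELDS` allow-list, and both function names are hypothetical. The idea is that the regulator defines which fields may leave the platform, the platform serves only that curated view, and a third party such as a research agency runs its own analysis on it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """A raw platform record (hypothetical fields, for illustration only)."""
    seller_id: str     # competitively sensitive, never exposed
    seller_size: str   # "small" or "large" (assumed segmentation)
    featured: bool     # whether the listing received a featured slot
    price: float       # competitively sensitive, never exposed


# Assumption: the regulator's guidelines reduce to an allow-list of fields.
EXPOSED_FIELDS = ("seller_size", "featured")


def curated_view(transactions):
    """Return only the allow-listed fields of each record."""
    return [{f: getattr(t, f) for f in EXPOSED_FIELDS} for t in transactions]


def featured_rate_by_size(records):
    """Third-party analysis: share of featured listings per seller segment."""
    groups = {}
    for r in records:
        groups.setdefault(r["seller_size"], []).append(r["featured"])
    return {size: sum(flags) / len(flags) for size, flags in groups.items()}
```

A research agency would call `featured_rate_by_size(curated_view(transactions))` and could flag a large gap between segments for the regulator to examine, without ever seeing seller identities or prices. In practice the curated view would more likely be served over an authenticated API and aggregated further, but the division of roles is the same.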

The unintended consequences of regulation

It’s now fairly clear that platforms have failed at self-regulation. Thoughtful and comprehensive regulations are required to save democracy, public health, privacy, and competition in the economy. But regulations come with consequences.

Critics have warned that privacy protections due to take effect in the European Union towards the end of 2020 will make online child sexual abuse harder to detect. Under the new rules, big tech firms like Facebook and Microsoft will be barred from using automatic tools that detect online grooming or images of child abuse. Critics of such tools contend that automatic scanning infringes on people’s privacy, especially that of people using chat and messaging apps. Those opposing the European Electronic Communications Code directive worry that banning detection tools in Europe could lead to firms halting their usage elsewhere in the world. “If a company in the EU stops using this technology overnight, they would stop using it all over the world,” said Emilio Puccio, coordinator of the European Parliament Intergroup on Children’s Rights.

As regulation speeds up in 2021, regulators will need to ensure that regulation effectively safeguards ecosystem interests without stifling data-driven innovation. Regulators will also need to coordinate across other actors and advocates to understand potential unintended consequences and craft regulations that mitigate them.

The return of regulation for the platform economy is one of the key themes I explore in the platform economy playbook.

State of the Platform Revolution

The State of the Platform Revolution report covers the key themes in the platform economy in the aftermath of the Covid-19 pandemic.

This annual report, based on Sangeet’s international best-selling book Platform Revolution, highlights the key themes shaping the future of value creation and power structures in the platform economy.

Themes covered in this report have been presented at multiple Fortune 500 board meetings, C-level conclaves, international summits, and policy roundtables.
